
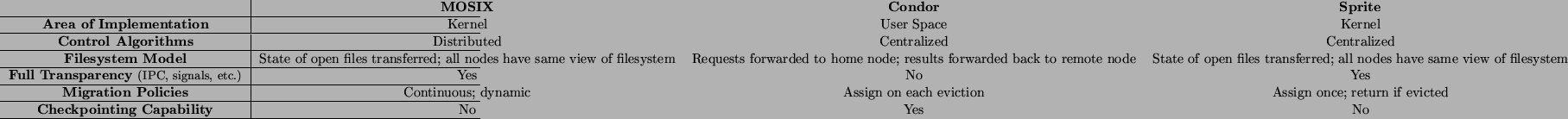

In this section we will explore some of the similarities and differences in the design decisions among several systems that support process migration. Specifically, we will examine MOSIX [12], developed at the Hebrew University of Jerusalem, Israel; Condor [14], developed at the University of Wisconsin-Madison; and Sprite [13], developed at the University of California at Berkeley. A summary of each system's primary design decisions is presented in Table 1. Each of these decisions is discussed in more detail below.

Condor, MOSIX, and Sprite appear similar on the surface in that they all implement process migration. Underneath, however, each of the three systems is trying to solve a different problem. The design decisions that went into the systems are necessarily different, because the designs were based on different assumptions.

MOSIX might best be described as an attempt to create a low-cost equivalent of a scalable SMP (symmetric multiprocessing, or multi-CPU) server. In an SMP system such as the Digital AlphaServer or SGI Challenge, multiple CPUs are tightly coupled, and the operating system can perform very fine-grained load balancing across them: any job can be scheduled on any processor with virtually no overhead. MOSIX, similarly, attempts to implement very low-overhead process migration so that the multicomputer, taken as a whole, can achieve fine-grained load balancing akin to that of an SMP. The MOSIX designers have expended a great deal of effort implementing very fast network protocols, optimizing network device drivers, and performing other analyses to push the performance of their network as far as possible.

Also in line with the SMP model, MOSIX goes to great lengths to preserve the semantics of a centralized OS from the point of view of both processes and users. Even when a process migrates, signal semantics remain the same, IPC channels such as pipes and TCP/IP sockets can still be used, and the process still appears to be on its "home node" according to programs such as ps.

As a result, MOSIX installations typically take the form of a "pool of processors": a large number of CPUs dedicated to acting as migration targets for high-throughput scientific computing. Although MOSIX can be used to borrow idle cycles from unused desktop workstations, that mode of operation is not its primary focus.

In contrast, Condor's primary motivation for process migration seems to be to provide a graceful way to evict processes that were using idle CPU cycles on a foreign machine once that machine is no longer idle. Condor's designers made many simplifying assumptions; for example, that remotely executing processes run in a vacuum, with no need to contact other processes via IPC channels. Their migration strategy does not provide a fully transparent migration model: processes "know" that they are running on a foreign machine, and the home machine has no record of the process's existence. These assumptions, while more limiting than the MOSIX model, buy a fair amount of simplicity: Condor's designers were able to implement process migration without modifying the kernel.

In motivation, Sprite seems to be a cross between Condor and MOSIX. Like MOSIX, Sprite strives for a very pure migration model, one in which the semantics of a process are almost exactly the same as if it had been running locally. IPC channels, signal semantics, and error transparency are all important to the Sprite design. However, Sprite's migration policy is much more akin to Condor's: its designers, too, seem primarily motivated by the desire to gracefully evict processes from machines that are no longer idle. When processes are first created with exec(), they are migrated to idle workstations if possible; later, they are migrated again only if the workstation owner returns and evicts them. Unlike MOSIX, Sprite does not attempt to dynamically rebalance the load once processes have been assigned to processors.

The remainder of this section examines some of the specific design decisions of the three systems in more detail.

User Space vs. Kernel Implementation. Sprite and MOSIX both involve extensive modifications to their respective kernels to support process migration. Remarkably, Condor's process migration scheme is implemented entirely in user space. Although no source code changes are necessary, users do need to link their programs with Condor's process migration library. The library intercepts certain system calls whenever it needs to record state about them. It also installs a signal handler so that the process can respond to signals from daemons running on the machines that tell it to checkpoint itself and terminate.
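To illustrate why such a scheme needs no kernel support, here is a minimal Python sketch of a user-space shim that wraps `open()` to record file state and installs a signal handler that requests a checkpoint. It is not Condor's library: the class `CheckpointShim`, the helper `process_lines`, the use of `SIGUSR1` as the checkpoint request, and the JSON checkpoint format are all hypothetical choices made for illustration (POSIX only).

```python
import json
import os
import signal
import tempfile


class CheckpointShim:
    """Hypothetical user-space migration library: call wrappers plus a signal handler."""

    def __init__(self, checkpoint_path, sig=signal.SIGUSR1):
        self.checkpoint_path = checkpoint_path
        self.files = []                    # (path, mode, file object) per wrapped open()
        self.checkpoint_requested = False
        # Assumption: the local daemon requests a checkpoint by sending `sig`.
        signal.signal(sig, self._on_signal)

    def _on_signal(self, signum, frame):
        # Keep the handler tiny; the checkpoint is taken later at a safe point.
        self.checkpoint_requested = True

    def open(self, path, mode="r"):
        """Wrapped open(): remember what is needed to reopen the file elsewhere."""
        f = open(path, mode)
        self.files.append((path, mode, f))
        return f

    def checkpoint(self, app_state):
        """Write application state plus the path, mode, and offset of each open file."""
        record = {
            "app_state": app_state,
            "files": [{"path": p, "mode": m, "offset": f.tell()}
                      for p, m, f in self.files if not f.closed],
        }
        with open(self.checkpoint_path, "w") as out:
            json.dump(record, out)
        return record


def process_lines(shim, path, state):
    """Application loop that polls for a checkpoint request between lines."""
    f = shim.open(path)
    for _line in iter(f.readline, ""):
        state["count"] += 1
        if shim.checkpoint_requested:
            shim.checkpoint(state)
            return "evicted"               # a real process would now exit
    f.close()
    return "finished"


def demo():
    with tempfile.TemporaryDirectory() as d:
        data_path = os.path.join(d, "input.txt")
        with open(data_path, "w") as f:
            f.write("a\nb\nc\n")
        shim = CheckpointShim(os.path.join(d, "ckpt.json"))
        os.kill(os.getpid(), signal.SIGUSR1)   # stand-in for the daemon's request
        status = process_lines(shim, data_path, {"count": 0})
        with open(shim.checkpoint_path) as f:
            saved = json.load(f)
        for _, _, fh in shim.files:
            fh.close()
        return status, saved
```

The handler only sets a flag and the checkpoint is written at a safe point in the loop, which keeps the signal handler simple; a real library must also capture the stack, heap, and registers, which this sketch omits.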

Centralized vs. Distributed Control. Condor and Sprite both rely on a centralized controller, which limits those systems' scalability and introduces a single point of failure for the entire system. In contrast, MOSIX nodes are all autonomous, and each uses a novel probabilistic information distribution algorithm to gather information from a randomly selected (small) subset of the other nodes in the system. This makes MOSIX much more scalable, and its completely decentralized control makes it more robust in the face of failures.
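The flavor of decentralized information dissemination can be sketched as a simple gossip protocol in Python. This is a simplified illustration, not the exact MOSIX algorithm: the class `Node`, the bounded `window` of remembered peers, the rule of sending one's own load plus the newest half of one's view to one random peer per round, and the `threshold` used to pick a migration target are all assumptions.

```python
import random
from collections import OrderedDict


class Node:
    """An autonomous node that knows the loads of only a few random peers."""

    def __init__(self, node_id, load, window=4):
        self.node_id = node_id
        self.load = load
        self.window = window               # maximum number of peers remembered
        self.view = OrderedDict()          # peer id -> last reported load, oldest first

    def receive(self, entries):
        for peer_id, load in entries:
            if peer_id == self.node_id:
                continue
            self.view.pop(peer_id, None)   # re-insert so the newest entry is last
            self.view[peer_id] = load
            while len(self.view) > self.window:
                self.view.popitem(last=False)   # forget the stalest entry

    def gossip(self, nodes, rng):
        """Send own load plus the newest half of the view to ONE random peer."""
        peers = [n for n in nodes if n.node_id != self.node_id]
        target = rng.choice(peers)
        items = list(self.view.items())
        newest_half = items[max(0, len(items) - self.window // 2):]
        target.receive([(self.node_id, self.load)] + newest_half)

    def migration_target(self, threshold=1):
        """Least-loaded known peer, if it is clearly less loaded than this node."""
        if not self.view:
            return None
        peer_id, load = min(self.view.items(), key=lambda kv: kv[1])
        return peer_id if load + threshold < self.load else None


def demo(rounds=20, seed=1):
    rng = random.Random(seed)
    nodes = [Node(i, load) for i, load in enumerate([9, 0, 4, 7, 2, 5, 8, 3])]
    for _ in range(rounds):
        for node in nodes:
            node.gossip(nodes, rng)
    return nodes
```

Each node contacts one randomly chosen peer per round and remembers only `window` peers, so the per-node cost does not grow with the cluster size, and no single node's failure stops the others from exchanging information.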

Filesystem Model. Condor does not assume that filesystems available on a process's home machine are also available on the target machine when a process migrates. Instead, it forwards all filesystem requests back to the home machine, which fulfills each request and sends the results back to the migration target. In contrast, Sprite has a special cluster-wide network file system, so it can assume that the same filesystem is available on every migration target. Sprite forwards the state of open files to the target machine, and filesystem requests are then carried out locally.² Like Sprite, MOSIX assumes that the same filesystem is globally available, but MOSIX uses standard NFS.
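Condor-style forwarding of file operations to the home machine can be sketched as a request/reply stub in Python. The classes `HomeMachine` and `ForwardingStub`, the JSON message format, and the restriction to text files are assumptions made for illustration; a direct function call stands in for the network connection between the migration target and the home machine.

```python
import json
import os
import tempfile


class HomeMachine:
    """Runs on the home machine and performs file operations on its local filesystem."""

    def __init__(self):
        self.files = {}
        self.next_fd = 3                   # descriptor numbers handed to the remote side

    def handle(self, message):
        req = json.loads(message)
        op = req["op"]
        if op == "open":
            fd, self.next_fd = self.next_fd, self.next_fd + 1
            self.files[fd] = open(req["path"], req["mode"])
            result = fd
        elif op == "read":
            result = self.files[req["fd"]].read(req["n"])
        elif op == "write":
            result = self.files[req["fd"]].write(req["data"])
        elif op == "close":
            self.files.pop(req["fd"]).close()
            result = 0
        else:
            raise ValueError(f"unknown operation: {op!r}")
        return json.dumps({"result": result})


class ForwardingStub:
    """Linked into the migrated process; ships every file call back home."""

    def __init__(self, send):
        self.send = send                   # transport: request string -> reply string

    def _call(self, **request):
        return json.loads(self.send(json.dumps(request)))["result"]

    def open(self, path, mode="r"):
        return self._call(op="open", path=path, mode=mode)

    def read(self, fd, n):
        return self._call(op="read", fd=fd, n=n)

    def write(self, fd, data):
        return self._call(op="write", fd=fd, data=data)

    def close(self, fd):
        return self._call(op="close", fd=fd)


def demo():
    with tempfile.TemporaryDirectory() as d:
        path = os.path.join(d, "out.txt")   # a path on the HOME machine
        home = HomeMachine()
        stub = ForwardingStub(home.handle)  # stands in for a network connection
        fd = stub.open(path, "w")
        written = stub.write(fd, "hello from the target")
        stub.close(fd)
        fd = stub.open(path, "r")
        data = stub.read(fd, 100)
        stub.close(fd)
        return written, data
```

Every file operation costs a round trip to the home machine, which is the price of not assuming a shared filesystem; the Sprite and MOSIX approach avoids this by performing the operation on the target's view of the shared filesystem.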

Fully Transparent Execution Location. MOSIX and Sprite support what might be called "full transparency": in these systems, a process appears to be running on its home node regardless of its actual execution location, and the process itself always behaves as if it were running on its home node, even after it has migrated elsewhere. This has several important side effects. For example, IPC channels such as TCP/IP connections and named pipes are maintained in MOSIX and Sprite even when the processes at either end of the channel migrate. Data is received at the communication endpoint (i.e., the process's home node), which then forwards it to the node on which the process is actually running. In contrast, Condor does not support such a strong transparency model; a Condor process that migrates appears to be running on the migration target. For this reason, migration of processes that are involved in IPC is not allowed.
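The home-node forwarding described above reduces to a location table kept at the home node. In the following minimal Python sketch, `HomeNode` and the dictionary-based `network` are hypothetical stand-ins for real communication endpoints and message delivery.

```python
class HomeNode:
    """Communication endpoint for processes that were created on this node."""

    def __init__(self, name, network):
        self.name = name
        self.network = network             # node name -> list of (pid, data) delivered there
        self.location = {}                 # pid -> node where the process runs now

    def register(self, pid):
        self.location[pid] = self.name     # a new process starts at home

    def migrate(self, pid, node):
        # Only this table changes; peers keep sending to the home node.
        self.location[pid] = node

    def receive(self, pid, data):
        """Accept data addressed to `pid` and forward it to wherever `pid` runs."""
        target = self.location[pid]
        self.network.setdefault(target, []).append((pid, data))
        return target
```

Because peers keep addressing the home node, a migration requires updating only one table entry, at the cost of an extra network hop for every message delivered to a migrated process.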

Migration Policies. As mentioned earlier, MOSIX attempts to balance the load dynamically and continuously throughout the lifetime of all running processes, with the goal of maximizing the overall CPU utilization of the cluster. Sprite schedules a process to an idle processor once, when the process is created, and migrates that process back to its home node if the foreign node's owner returns. Once an eviction has occurred, Sprite does not re-migrate the evicted process to another idle processor. Condor falls somewhere in between these two. Like Sprite, Condor assigns a process to an idle node when the job is created. However, unlike Sprite, Condor attempts to find another idle node for the process every time it is evicted. In the absence of evictions, Condor does not attempt to dynamically rebalance the load as MOSIX does.
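The three policies can be contrasted as a single decision function. This Python sketch is a simplified reading of the paragraph above, not code from any of the systems: `Event`, `choose_node`, the `margin` parameter, the convention that `None` means "wait in the queue", and the behavior when no idle node exists at creation time are all assumptions.

```python
from enum import Enum


class Event(Enum):
    CREATED = "created"      # the process has just been created
    EVICTED = "evicted"      # the owner of the foreign node has returned
    TICK = "tick"            # periodic check with no eviction


def choose_node(policy, event, home, current, idle_nodes=(), loads=None, margin=1.0):
    """Where should the process run next? None means "wait in the queue"."""
    if policy == "sprite":
        if event is Event.CREATED:
            return idle_nodes[0] if idle_nodes else home
        if event is Event.EVICTED:
            return home                  # go home; never re-migrated afterwards
        return current
    if policy == "condor":
        if event in (Event.CREATED, Event.EVICTED):
            # Look for an idle node each time; otherwise wait (checkpointed) in the queue.
            return idle_nodes[0] if idle_nodes else None
        return current                   # no rebalancing without an eviction
    if policy == "mosix":
        # Rebalance on every event: move if some node is clearly less loaded.
        best = min(loads, key=loads.get)
        return best if loads[best] + margin < loads[current] else current
    raise ValueError(f"unknown policy: {policy!r}")
```

Only the MOSIX branch consults load information on a `TICK`, which reflects the continuous rebalancing described above; the Sprite and Condor branches act only at creation and eviction.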

Checkpointing Capability. The mechanics of Condor's process migration implementation are such that the complete state of the process is written to disk when a migration occurs. After the process state is written to disk, the process is terminated, the state is transferred to a new machine, and the process is reconstructed there. This implementation has several useful side effects. For example, the process state file can be saved. Saving it has the effect of "checkpointing" the process, so that it can be restarted from an earlier point in case of a hardware failure or other abnormal termination. The frozen process can also be queued; who is to say that it has to be restarted immediately? The state file, with its process in stasis, can be kept indefinitely, perhaps waiting for another idle processor to become available before restarting. The MOSIX and Sprite implementations, by contrast, generally transfer state memory-to-memory, which precludes these possibilities.
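Freezing a job to disk, queueing it, and restarting it later can be sketched with Python's `pickle` module on a toy computation. The functions `run`, `freeze`, and `thaw` are hypothetical, and a real checkpoint must also capture registers, the stack, the heap, and open files, none of which this sketch attempts.

```python
import os
import pickle
import tempfile
from collections import deque


def run(state, steps):
    """Toy long-running job (sum of squares below n) that can resume from `state`."""
    for _ in range(steps):
        if state["i"] >= state["n"]:
            break
        state["total"] += state["i"] ** 2
        state["i"] += 1
    return state


def freeze(state, path):
    """Write the complete job state to disk; the process may now terminate."""
    with open(path, "wb") as f:
        pickle.dump(state, f)


def thaw(path):
    """Reconstruct the job state, possibly on another machine and much later."""
    with open(path, "rb") as f:
        return pickle.load(f)


def demo():
    with tempfile.TemporaryDirectory() as d:
        path = os.path.join(d, "job.ckpt")
        state = run({"i": 0, "n": 10, "total": 0}, steps=4)   # evicted after 4 steps
        freeze(state, path)
        waiting = deque([path])            # frozen jobs wait for an idle processor
        resumed = run(thaw(waiting.popleft()), steps=100)
        replay = run(thaw(path), steps=100)  # same checkpoint reused, e.g. after a crash
        return resumed["total"], replay["total"]
```

Because the state file outlives the process, the same checkpoint serves both purposes named above: restarting after a failure and deferring the restart until a processor becomes idle.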
